- A way to detect fake images of people has come out of the world of astronomy. Researchers adapted a method normally used to study galaxies to detect deep fakes. They used the Gini coefficient, which measures how the light in an image of a galaxy is distributed among its pixels.

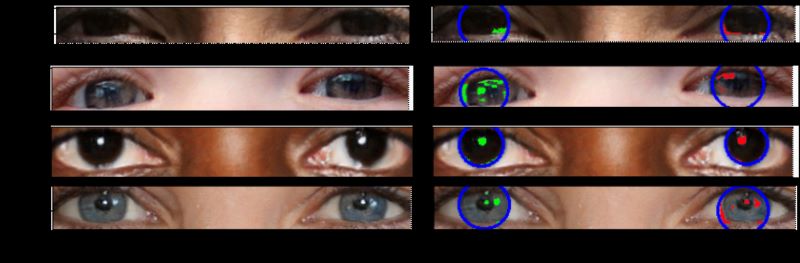

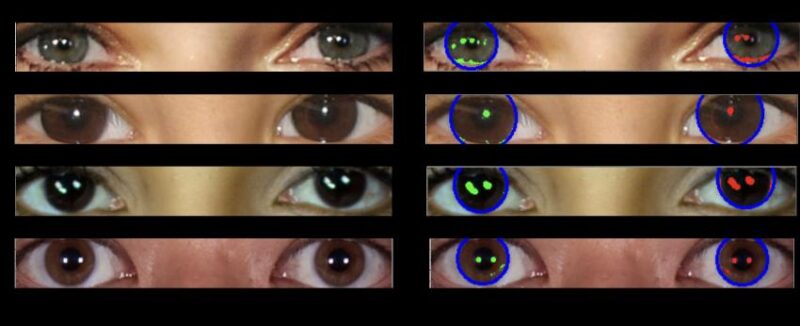

- The method can be used to examine the eyes of people in real and fake images (AI generated images). If the reflections do not match, the image is most likely fake.

- The method is not perfect at detecting fake images. But, the researchers said, “this method provides us with a baseline, a plan of attack, in the arms race to detect deep fakes.”

The Royal Astronomical Society published this original article on 17 July 2024. Editing by EarthSky.

How to distinguish an AI-generated image with science

In an era when artificial intelligence (AI) imaging is at the fingertips of the masses, the ability to detect fake photos – especially deep fakes of people – is becoming increasingly important.

What if you could tell just by looking into someone’s eyes?

That’s the compelling finding of new research shared at the Royal Astronomical Society’s National Astronomy Meeting in Hull, UK, which suggests that AI-generated fakes can be spotted by analyzing human eyes in the same way astronomers study photographs of galaxies.

The work, by University of Hull M.Sc. student Adejumoke Owolabi, centers on the reflections in a person’s eyeballs.

If the reflections in the two eyes match, the image is likely that of a real person. If they don’t, it is probably a deepfake.

Kevin Pimbblet is Professor of Astrophysics and Director of the Center of Excellence for Data Science, Artificial Intelligence and Modeling at the University of Hull. Pimbblet said:

Eyeball reflections are consistent for the real person, but inaccurate (from a physics point of view) for the fake person.

Like stars in their eyes

The researchers analyzed light reflections on people’s eyes in real and AI-generated images. They then applied methods commonly used in astronomy to quantify the reflections, and checked for consistency between the reflections in the left and right eyeballs.

Fake images often lack consistency in the reflections between the two eyes, while real images generally show the same reflections in both eyes. Pimbblet said:

To measure the shapes of galaxies, we analyze whether they are compact in the center, whether they are symmetrical, and how smooth they are. We analyze the distribution of light.

We detect the reflections in an automated way and run their morphological features through the CAS [concentration, asymmetry, smoothness] and Gini indices to compare similarity between left and right eyeballs.

The findings show that deepfakes have some differences between the pair of eyes.
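For readers curious what one of these indices looks like in practice, here is a minimal Python sketch of the asymmetry part of CAS: rotate a patch by 180 degrees and measure how much it differs from itself. It is not the team's implementation. The function name `rotational_asymmetry`, the normalization (conventions vary between papers), and the omission of any background correction are simplifying assumptions made for illustration.

```python
def rotational_asymmetry(patch):
    """Asymmetry of a 2D patch (a list of rows of pixel values).

    Assumed convention: sum of |I - I_rotated| divided by sum of |I|,
    rotating about the patch center, with no background term.
    """
    rows = [[float(v) for v in row] for row in patch]
    # Reversing the row order and each row is a 180-degree rotation.
    rotated = [row[::-1] for row in rows[::-1]]
    total = sum(abs(v) for row in rows for v in row)
    if total == 0:
        return 0.0  # an all-dark patch has no measurable asymmetry
    diff = sum(
        abs(a - b)
        for row, rot_row in zip(rows, rotated)
        for a, b in zip(row, rot_row)
    )
    return diff / total


# Hypothetical toy patches: one symmetric highlight, one off-center highlight.
symmetric = [[0, 1, 0],
             [1, 2, 1],
             [0, 1, 0]]
lopsided = [[1, 0],
            [0, 0]]
print(rotational_asymmetry(symmetric))  # 0.0
print(rotational_asymmetry(lopsided))   # 2.0
```

With this normalization a perfectly symmetric patch scores 0 and a patch that shares no light with its rotated copy scores 2.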

Light distribution

The Gini coefficient is commonly used to measure how the light in an image of a galaxy is distributed among its pixels. Astronomers make this measurement by sorting the pixels that make up the image of a galaxy in ascending order of flux. Then they compare the result with what would be expected from a perfectly even flux distribution.

A Gini value of 0 corresponds to a galaxy in which the light is evenly distributed across all pixels in the image. A Gini value of 1 corresponds to a galaxy with all of its light concentrated in a single pixel.
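As a rough illustration of the calculation described above, the Python sketch below sorts pixel values in ascending order and compares them with a perfectly even distribution. It is not the researchers' code. The function name `gini`, the tiny hand-made patches, and the specific rank-weighted formula (one common form of the Gini statistic in galaxy morphology work) are assumptions made for the example.

```python
from itertools import chain


def gini(patch):
    """Gini coefficient of a 2D patch (a list of rows of pixel values).

    Assumed formula: sum over sorted pixels of (2i - n - 1) * flux_i,
    divided by total_flux * (n - 1), with i running from 1 to n.
    """
    # Flatten the patch and sort pixel fluxes in ascending order.
    flux = sorted(abs(float(v)) for v in chain.from_iterable(patch))
    n = len(flux)
    total = sum(flux)
    if n < 2 or total == 0:
        return 0.0  # too few pixels or no light: treat as perfectly even
    weighted = sum((2 * i - n - 1) * f for i, f in enumerate(flux, start=1))
    return weighted / (total * (n - 1))


# The two limiting cases from the text.
even = [[1.0] * 4 for _ in range(4)]     # same light in every pixel
single = [[0.0] * 4 for _ in range(4)]
single[1][2] = 5.0                        # all light in one pixel
print(gini(even))    # 0.0
print(gini(single))  # 1.0

# Hypothetical left/right eye reflections: compare the two Gini values.
left_eye = [[0.0] * 8 for _ in range(8)]
right_eye = [[0.0] * 8 for _ in range(8)]
for r in (2, 3):
    for c in (2, 3):
        left_eye[r][c] = 1.0              # a small 2x2 highlight
right_eye[3][3] = 4.0                     # same total light, one pixel
print(abs(gini(left_eye) - gini(right_eye)))
```

For a real photo, the expectation reported here is that the two eyes give similar values. A large gap between them would be one hint, not proof, that the image is fake.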

The team also tested CAS parameters, a tool originally developed by astronomers to measure the light distribution of galaxies and determine their morphology, but found that they were not a successful predictor of fake eyes. Pimbblet said:

It is important to note that this is not a silver bullet for detecting fake images.

There are false positives and false negatives; it won’t catch everything. But this method provides us with a baseline, a plan of attack, in the arms race to detect deep fakes.

Bottom line: A method astronomers use to measure light from galaxies can also be used to tell whether a photo of someone is real or a fake AI-generated image.

Via the Royal Astronomical Society
