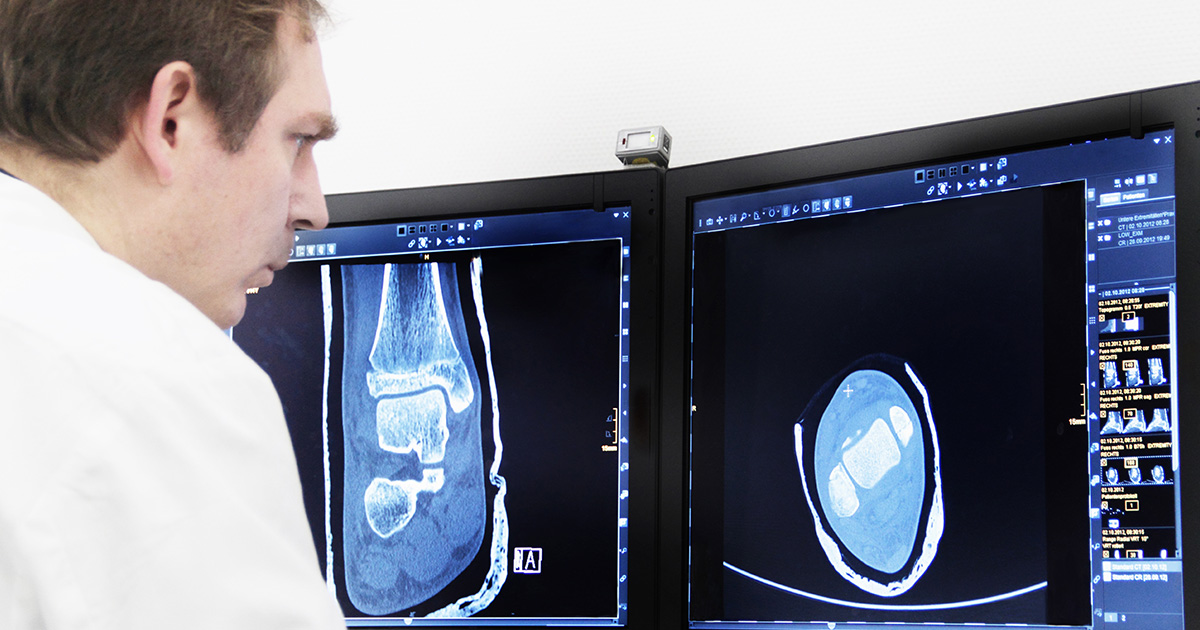

Billionaire tech mogul Elon Musk this past week took to his X social media platform to request that users of his Grok artificial intelligence chatbot upload their medical images and generate a diagnosis.

“Try submitting X-ray, PET, MRI or other medical images to Grok for analysis,” Musk suggested on October 29 on the X platform, where subscribers access the AI.

Uploading medical data through a social media platform raises questions about privacy.

Some in healthcare have already tested the large language model – which is controversial for mining social media data as part of its training – and are providing mixed reviews on accuracy.

Meanwhile, Grok had already prompted European privacy regulators to question potential violations of the General Data Protection Regulation.

Mixed reviews on accuracy

“This is still early stage, but it is already quite accurate and will become extremely good,” Musk declared in a post on X.

Dr. Derya Unutmaz, who researches the mechanisms of human T cells at The Jackson Laboratory, a non-profit scientific research institute in Connecticut, was one of many who tested Grok last week with an X-ray, noting that he had to adjust the prompt to get the right answer:

“When I used this prompt, Grok got it right this time: ‘You are an emergency physician, this patient arrived to emergency with shortness of breath. No obvious trauma. What’s your diagnosis?’ for the following X-ray,” he said in reply to Musk.

“Apparently it needed some context,” he added. “The response is very thorough and impressive though!”

Dr. Laura Heacock, a breast radiologist, deep learning researcher and associate professor at NYU Imaging, was not as impressed, according to her reply.

Heacock said she used the same breast mammograms, ultrasounds and MRIs that she previously used to test GPT4 as a benchmark, and in a series of 12 social media posts, detailed Grok’s answers and her opinions of those answers.

“So how did Grok do on breast radiology? A little better than GPT4v, but not a single diagnosis correct,” she said, noting that she had expected much better performance given Musk’s statement on accuracy.

Grok could identify an image as a mammogram or an ultrasound but replied to Heacock’s short queries with incorrect body parts and erroneous possible findings. In a mammogram with known malignancy, Grok suggested that what Heacock called an “obvious big left spiculated cancer” was possibly calcifications.

“There are none,” she said.

Heacock said she would retry Grok in a few months, but, “for now, non-generative AI methods continue to outperform in medical imaging.”

Social data in model training

xAI began laying the foundation for its “anti-woke” Grok-1 AI model in November 2023.

“Grok is designed to answer questions with a bit of wit and has a rebellious streak, so please don’t use it if you hate humor,” xAI said in its announcement last year.

“A unique and fundamental advantage of Grok is that it has real-time knowledge of the world via the X platform. It will also answer spicy questions that are rejected by most other AI systems,” the company added.

On March 11, 2024, Musk announced on his social media platform that xAI would make the Grok algorithm, a direct competitor to ChatGPT, accessible as open-source software.

The company then said in May it had raised $6 billion after the release of the Grok-1.5 model, which it said achieved improved reasoning and problem-solving capabilities, and the release of Grok-1.5V, which added the ability to process visual information – including documents, diagrams, charts, screenshots and photographs.

Recognizing the potential to disrupt healthcare, Dr. Sai Balasubramanian, who was appointed Public Health Informatics Data & Exchange Unit Director at the Texas Department of State Health Services earlier this year, opined for Forbes that Grok could be embedded as a foundation model into daily healthcare workflows, such as in radiology practices.

Like other AI models, Grok could be used to increase task automation, improving clinical workflows and productivity, but would likely face competition in the enterprise space from industry veterans, Balasubramanian said.

However, the public health informatics director noted in the article that there is a significant opportunity for Musk to bring together xAI and his medical device company Neuralink.

In 2020, Musk’s Neuralink was demoing its brain-computer implant in pigs. In September, the neurological tech startup announced it had received the U.S. Food and Drug Administration’s breakthrough device designation for its Blindsight implant, which aims to restore vision.

The Tesla and SpaceX chief executive was once on a crusade to stop what he called “the AI apocalypse” and traded words about its potentially destructive power with Facebook CEO Mark Zuckerberg.

The latter criticized Musk’s notions of AI’s doom and was optimistic about AI’s potential for enhanced diagnostic capabilities and innovation in drug discovery. Musk, who had previously said that AI held the potential to reduce humankind to “a pet or a house cat” in a future controlled by it, fired back that Zuckerberg’s “understanding of the subject is limited.”

Edging up against privacy regs

Currently, X Premium and Premium+ users can access two new models: Grok-2, which integrates real-time information from the X social media platform, and Grok-2 mini.

With the Grok-2 beta release in August, users gained the ability to create images – many of them highly inappropriate due to a lack of filters, as researchers were able to show this summer – from text prompts and publish them to the social platform. And by connecting real-time data from X, Grok could respond to prompts about current events as they unfold.

Government observers reacted, citing a looseness and general disdain for safe AI and social media standards pervading Grok. In addition to inquiries from the European Union about potential violations of the Digital Services Act, nine states sent letters about 2024 election misinformation created using the platform.

“X has the responsibility to ensure all voters using your platform have access to guidance that reflects true and accurate information about their constitutional right to vote,” Minnesota Secretary of State Steve Simon said in an August 5 letter.

“We urge X to immediately adopt a policy of directing Grok users to CanIVote.org when asked about elections in the U.S.”

Harvesting data and when HIPAA applies

At least one privacy expert has raised concerns that HIPAA-covered entities participating in efforts to improve Grok’s accuracy on healthcare data via social media could compromise patient privacy in the U.S.

And European government leaders previously questioned developer xAI for suspected violations of GDPR. On August 6, the Irish Data Protection Commission asked the High Court of Ireland to stop the social media network from harvesting account holder data to train the Grok LLM.

However, if users ask Grok something, it does not automatically post to the X social media platform – users would need to do that directly at this time, said Lee Kim, senior principal for cybersecurity and privacy at HIMSS, the parent company of Healthcare IT News.

“Right now, you can toggle on or off — show your posts as well as your interactions, inputs and results with Grok to be used for training and fine-tuning,” Kim said Monday, sharing a screenshot. “You can also choose to delete your conversation history.”

While users can make some choices according to xAI’s privacy policy, “they need to be aware of how their data is used and handled,” said Kim. HIPAA can apply to data shared on social media platforms if a covered entity discloses protected patient information, she explained.

“It all depends on who is disclosing the information,” said Kim. “If it’s a covered entity, business associate or organized health care arrangement, HIPAA does apply.”

Andrea Fox is senior editor of Healthcare IT News.

Email: afox@himss.org

Healthcare IT News is a HIMSS Media publication.